State Estimation with YOLO

In this project, I built a Multi-Object Tracking (MOT) pipeline using YOLO models, an Extended Kalman Filter (EKF), and an Intersection over Union (IoU) association approach to track objects in public driving data. I deployed the algorithm in C++ on the Robot Operating System (ROS) middleware, leveraging its extensive ecosystem of off-the-shelf packages and tools.

Check out the video above to see the working demonstration!

Background & Motivation

Where Kalman Filters Shine

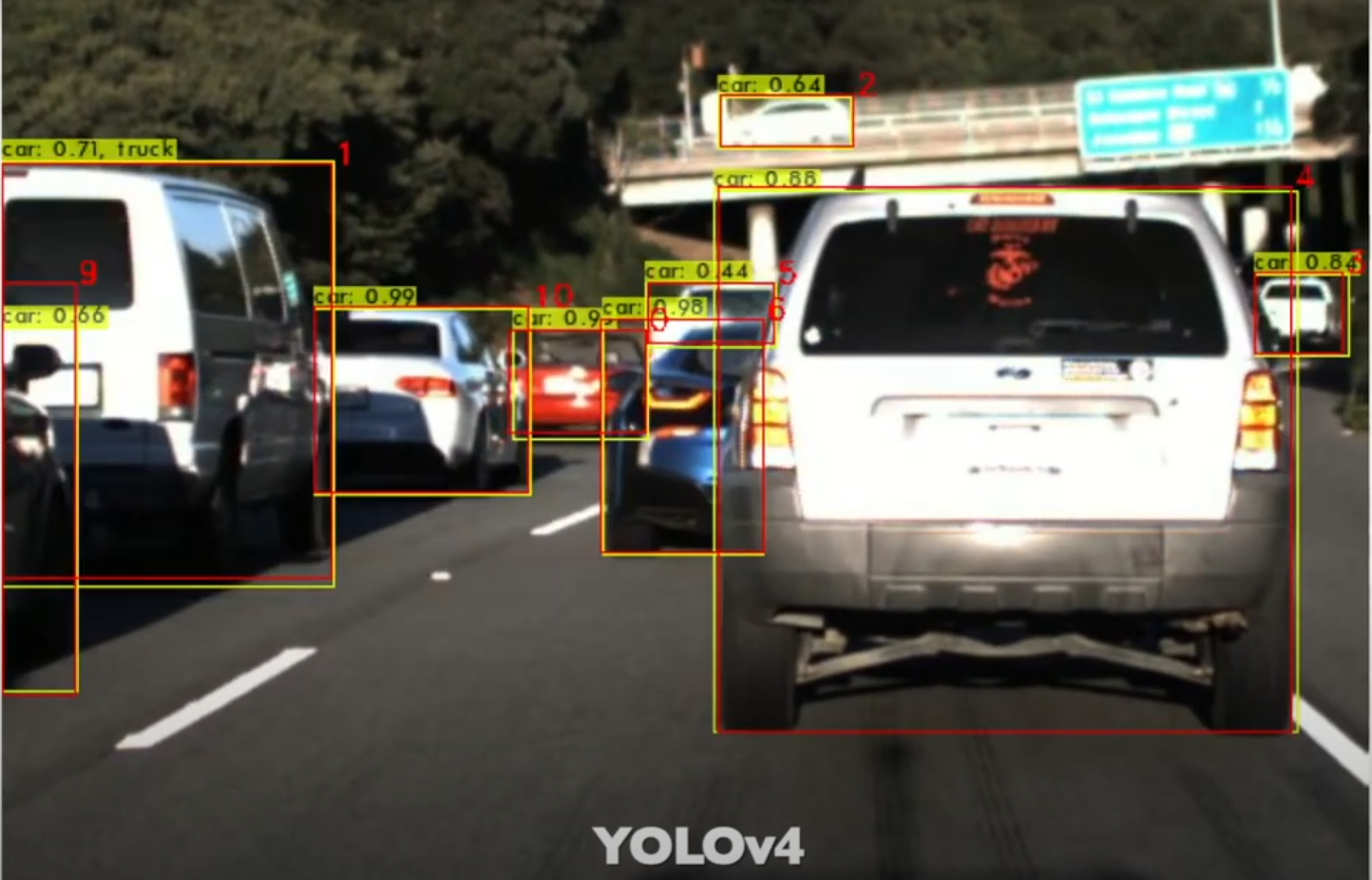

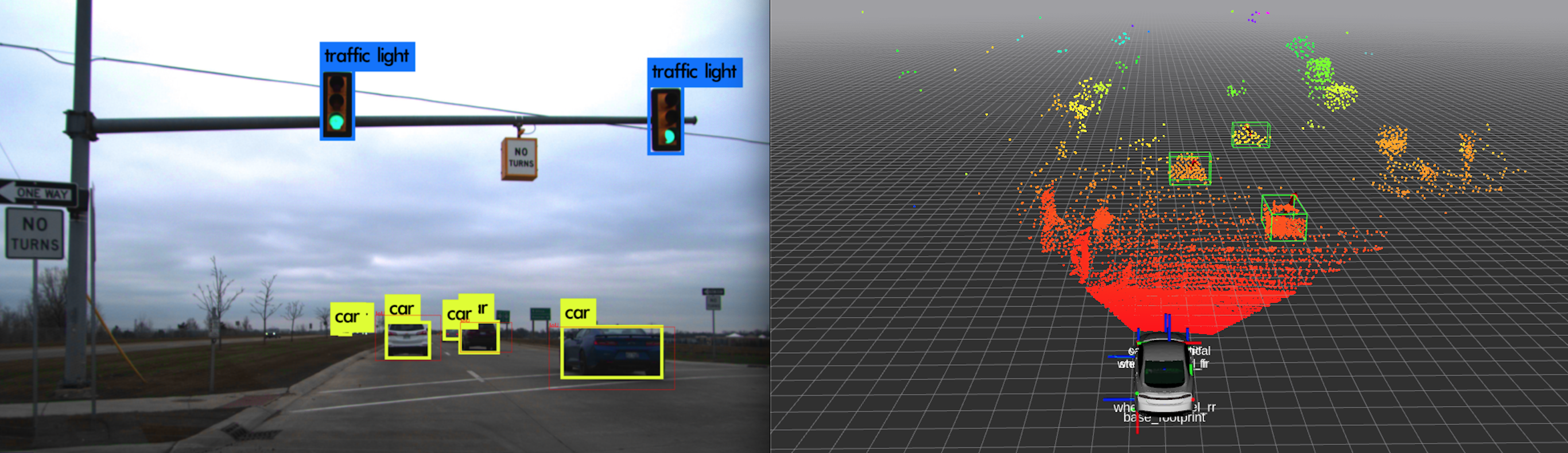

In Multi-Object Tracking (MOT), we often deal with data from multiple sources, such as camera feeds or LiDAR scans shown above, where each object needs a unique identifier to be tracked across frames. However, using raw sensor data directly for tracking has several limitations:

- Noise: Sensor data is often noisy, which can result in inaccurate detections.

- False Positives: Misidentifications can lead to tracking errors and identity switches.

- Momentary Occlusions: Objects can temporarily disappear from view, especially in cluttered environments, leading to gaps in tracking.

- High Computational Load: Processing raw sensor data requires significant computational resources, particularly in dense scenes or with high-resolution inputs.

To address these challenges, we introduce the Kalman Filter. This filter maintains a state (e.g., location, speed) for each tracked object and continuously updates this state as new detections are received. Here’s how the Kalman Filter enhances MOT:

- Smoothing: The Kalman Filter smooths over inconsistencies in detections, providing a stable estimate of an object’s position even when detection quality fluctuates.

- Handling Occlusions: By predicting an object’s future state, the Kalman Filter can “fill in” missing detections, maintaining a coherent track even when objects are briefly occluded.

- Improved Identity Consistency: The filter’s predictive capabilities reduce the likelihood of ID switches, which are common with simpler tracking methods.

- Early-Stage Sensor Fusion: Kalman filters can combine multiple sensor inputs to provide a more robust and timely estimate. For example, a GPS on a car offers accurate location information but may update slowly or drop updates in tunnels. By fusing GPS data with faster sensor inputs, such as data from an IMU (Inertial Measurement Unit) and wheel speed sensors, the Kalman filter can fill in gaps during GPS dropouts and maintain a continuous, accurate track.
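To make the smoothing and occlusion-handling points concrete, here is a minimal sketch of a linear Kalman filter tracking one coordinate with a constant-velocity model. It is not the EKF used in this project: the class name `ConstantVelocityKF`, the helper `run_track`, the noise values `q` and `r`, and the sample measurements are all assumptions chosen for illustration. A `None` measurement stands in for a frame where the detector missed the object.

```python
from dataclasses import dataclass


@dataclass
class ConstantVelocityKF:
    """1D Kalman filter with state [position, velocity] (illustrative only)."""
    pos: float = 0.0
    vel: float = 0.0
    # Covariance P = [[p00, p01], [p10, p11]]; start with high uncertainty.
    p00: float = 10.0
    p01: float = 0.0
    p10: float = 0.0
    p11: float = 10.0
    q: float = 0.01  # process noise added to the diagonal each step (assumed)
    r: float = 1.0   # measurement noise variance (assumed)

    def predict(self, dt: float = 1.0) -> float:
        # State: x = F x, with F = [[1, dt], [0, 1]].
        self.pos += self.vel * dt
        # Covariance: P = F P F^T + Q.
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11 + self.q
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos

    def update(self, z: float) -> float:
        # Only position is measured, so H = [1, 0].
        y = z - self.pos                      # innovation
        s = self.p00 + self.r                 # innovation variance
        k0, k1 = self.p00 / s, self.p10 / s   # Kalman gain
        self.pos += k0 * y
        self.vel += k1 * y
        # Covariance: P = (I - K H) P.
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos


def run_track(measurements, dt=1.0):
    """Filter a sequence of positions; None means the detection is missing."""
    kf = ConstantVelocityKF(pos=measurements[0])
    estimates = [kf.pos]
    for z in measurements[1:]:
        kf.predict(dt)
        if z is not None:  # during an occlusion we keep the prediction
            kf.update(z)
        estimates.append(kf.pos)
    return estimates


# Object moving roughly 2 units per frame, occluded for two frames.
measurements = [0.3, 1.8, 4.1, 6.2, None, None, 12.1, 13.9]
estimates = run_track(measurements)
print([round(e, 2) for e in estimates])
```

During the two missing frames the filter simply coasts on its velocity estimate, which is the "fill in" behavior described in the list above. An EKF follows the same predict/update loop but linearizes a nonlinear motion or measurement model at each step.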

MOT in Late-Stage Track Fusion

Late fusion, in the context of Multi-Object Tracking (MOT), is a technique for combining information from various tracks after each sensor has independently processed its data.

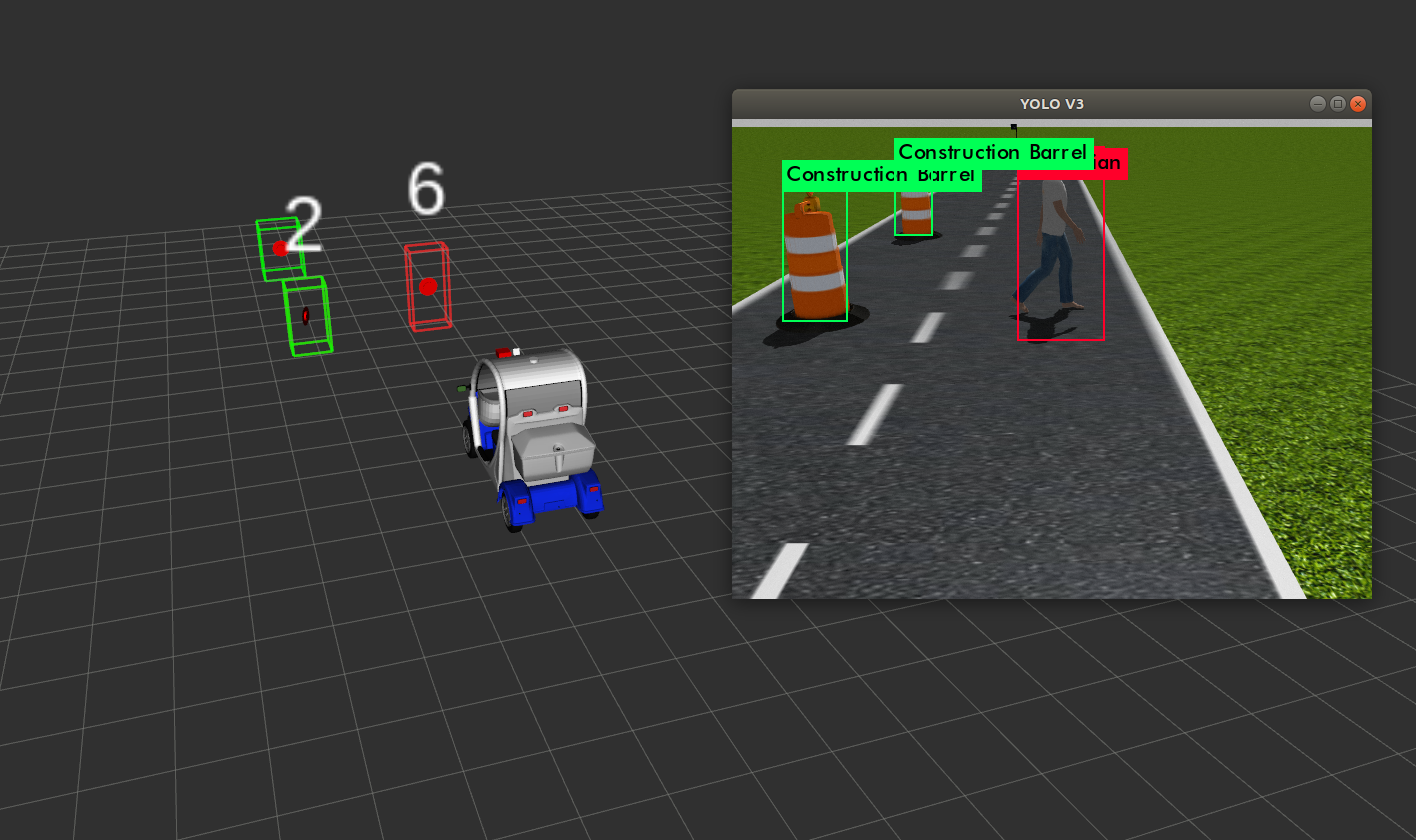

Above is an example from my previous work. I used YOLO to detect and classify objects in video feeds and fused these detections with 3D tracks from LiDAR. By combining the tracks from each sensor, we get a more refined understanding of the objects in the scene, which allows for more complex decision making.

An advantage of late fusion is that it enables flexibility and adaptability, allowing each detection system to operate optimally in specific conditions.

Multi-Object Tracking Pipeline

Here’s a breakdown of the key steps in my MOT pipeline:

- Detection: YOLO detects objects in each frame, providing bounding box coordinates.

- Association: Matches new detections to existing tracks based on IoU, ensuring consistency over frames.

- Track Identity Creation & Destruction: Initiates a new track for unseen objects and removes tracks when objects stay lost or occluded for too long.

- Estimation: The EKF predicts object motion, smoothing detected positions and mitigating sudden movement changes. I used the formulas from the referenced article.
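The association and track lifecycle steps above can be sketched roughly as follows. This is a simplified illustration, not the project's C++ implementation: the `Tracker` class, the greedy best-first matching, and the `iou_threshold` and `max_misses` values are all assumptions. Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and each track stores its last matched box where a full pipeline would store the EKF prediction.

```python
from itertools import count


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class Tracker:
    """Greedy IoU tracker with simple track creation and deletion."""

    def __init__(self, iou_threshold=0.3, max_misses=2):
        self.iou_threshold = iou_threshold  # minimum IoU to accept a match (assumed)
        self.max_misses = max_misses        # frames a track may go unmatched (assumed)
        self.tracks = {}                    # track id -> {"box": ..., "misses": int}
        self._ids = count(1)

    def step(self, detections):
        # Score every (track, detection) pair, best overlap first.
        pairs = sorted(
            ((iou(t["box"], d), tid, j)
             for tid, t in self.tracks.items()
             for j, d in enumerate(detections)),
            reverse=True,
        )
        used_tracks, used_dets = set(), set()
        for score, tid, j in pairs:
            if score < self.iou_threshold:
                break  # remaining pairs overlap too little to match
            if tid in used_tracks or j in used_dets:
                continue
            self.tracks[tid] = {"box": detections[j], "misses": 0}
            used_tracks.add(tid)
            used_dets.add(j)

        # Unmatched tracks age, and are destroyed once they exceed max_misses.
        for tid in list(self.tracks):
            if tid not in used_tracks:
                self.tracks[tid]["misses"] += 1
                if self.tracks[tid]["misses"] > self.max_misses:
                    del self.tracks[tid]

        # Unmatched detections start new tracks with fresh ids.
        for j, d in enumerate(detections):
            if j not in used_dets:
                self.tracks[next(self._ids)] = {"box": d, "misses": 0}

        return {tid: t["box"] for tid, t in self.tracks.items()}


tracker = Tracker()
frame1 = tracker.step([(0, 0, 10, 10), (50, 50, 60, 60)])
frame2 = tracker.step([(1, 0, 11, 10), (51, 50, 61, 60)])
print(frame1)
print(frame2)
```

The `max_misses` allowance is what lets a track survive a brief occlusion instead of being destroyed and recreated under a new id.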

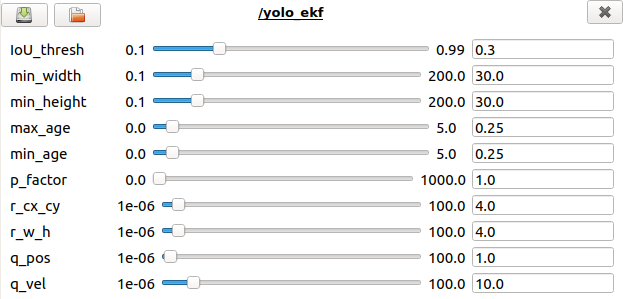

A big advantage of integrating this system in ROS is that it allows developers to tune parameters on the fly using playback or live data. The picture above highlights the key parameters I needed to tune for the MOT system. I explain these in more detail in the relevant section of my project's GitHub page.

Challenges, Alternatives, and Final Thoughts

Building a Multi-Object Tracking (MOT) pipeline with the Extended Kalman Filter (EKF) and YOLO was both rewarding and challenging. While the Kalman Filter provides robust tracking capabilities, it has limitations, particularly during extended occlusions or situations where objects exhibit irregular motion. These can lead to issues like ID swaps, where object identities are lost or confused during tracking.

Alternatives to a standalone EKF tracker include approaches such as SORT (Simple Online and Realtime Tracking), which pairs a Kalman filter with the Hungarian algorithm for better association, reducing the likelihood of ID swaps. For a more resilient solution, DeepSORT adds appearance feature embeddings from a Convolutional Neural Network (CNN), significantly enhancing tracking accuracy, especially in dense and complex scenes. You can learn more about DeepSORT here.
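To illustrate why a globally optimal assignment can help with ID swaps, the sketch below compares greedy best-first matching with an assignment that maximizes the total IoU. The Hungarian algorithm finds that optimum efficiently. For brevity this example uses a brute-force search over permutations instead, which gives the same result on tiny square matrices but does not scale. The function names, the threshold, and the score matrix are made up for the example.

```python
from itertools import permutations


def greedy_assign(scores, threshold=0.3):
    """Match the highest remaining score first; scores[i][j] is IoU(track i, detection j)."""
    pairs = sorted(
        ((s, i, j) for i, row in enumerate(scores) for j, s in enumerate(row)),
        reverse=True,
    )
    used_i, used_j, matches = set(), set(), []
    for s, i, j in pairs:
        if s < threshold:
            break
        if i not in used_i and j not in used_j:
            matches.append((i, j))
            used_i.add(i)
            used_j.add(j)
    return sorted(matches)


def optimal_assign(scores, threshold=0.3):
    """Maximize total score over all one-to-one assignments (square matrix assumed).

    Brute-force stand-in for the Hungarian algorithm; only suitable for tiny inputs.
    """
    n = len(scores)
    best = max(
        permutations(range(n)),
        key=lambda perm: sum(scores[i][perm[i]] for i in range(n)),
    )
    # Drop pairs whose overlap is too small to count as a match.
    return sorted((i, j) for i, j in enumerate(best) if scores[i][j] >= threshold)


# Two tracks, two detections: track 0 overlaps both, track 1 only detection 0.
scores = [
    [0.60, 0.55],
    [0.50, 0.00],
]
greedy = greedy_assign(scores)
optimal = optimal_assign(scores)
print("greedy: ", greedy)
print("optimal:", optimal)
```

Here the greedy matcher commits track 0 to detection 0 and leaves track 1 with nothing, while the optimal assignment keeps both tracks alive. In practice, a library routine such as SciPy's `linear_sum_assignment` would replace the brute-force search.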

Implementing and refining these techniques gave me a deep appreciation for the challenges of real-world tracking and the powerful open-source tools that support innovation in this field. I hope my project can inspire others!